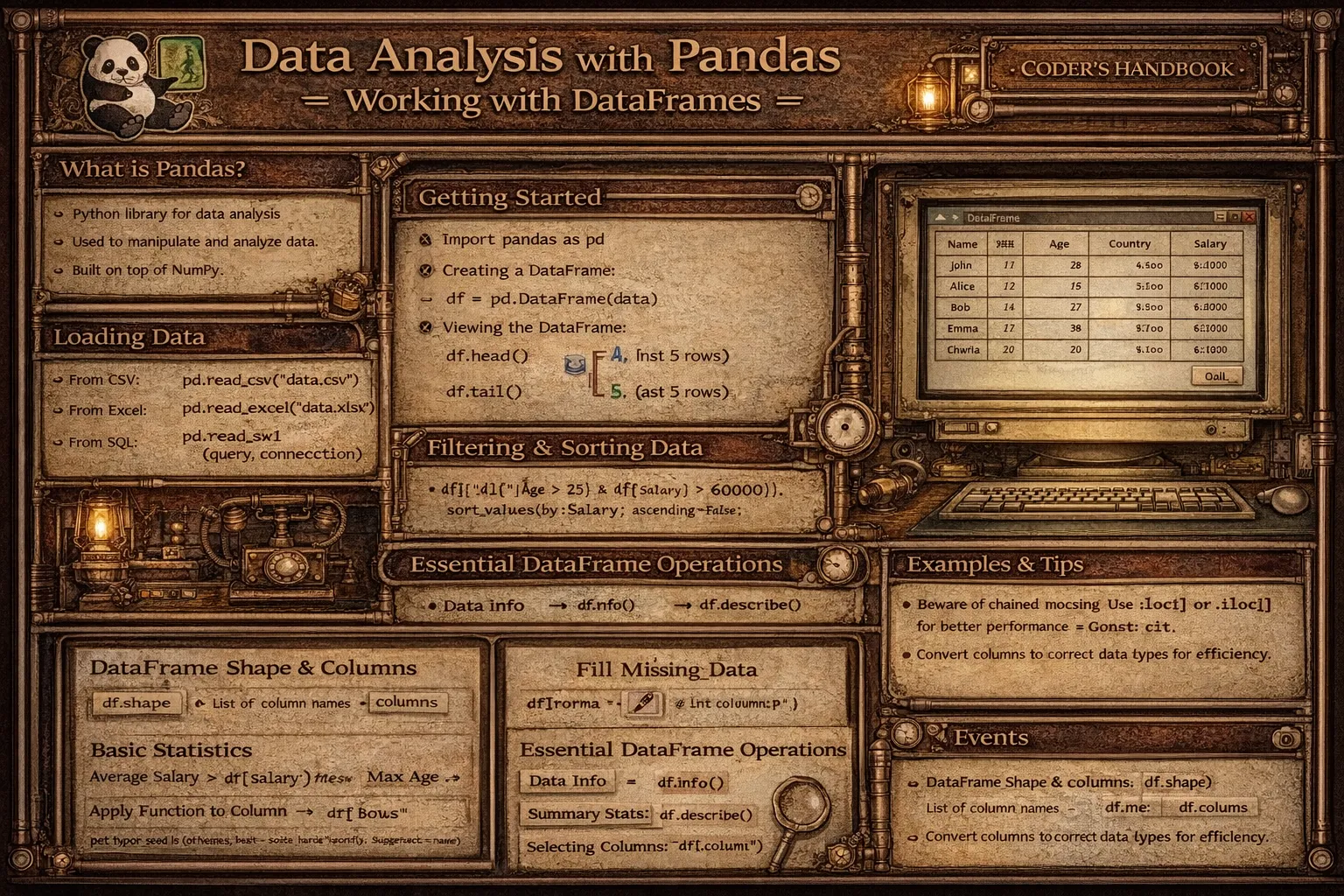

Data Analysis with Pandas: Working with DataFrames

Data analysis involves examining datasets to extract insights, identify patterns, and support decision-making, with Pandas providing Python's most powerful library for tabular data manipulation. Pandas introduces two fundamental data structures: Series representing one-dimensional labeled arrays similar to lists or columns, and DataFrame representing two-dimensional tabular data with rows and columns like spreadsheets or SQL tables. DataFrames enable efficient operations on structured data including filtering rows based on conditions, selecting columns, sorting data, handling missing values, aggregating statistics, and transforming datasets through intuitive APIs eliminating manual loops.

This comprehensive guide explores creating DataFrames from dictionaries with keys becoming columns, lists creating single columns, CSV files using read_csv() loading external data, and Excel files with read_excel(), accessing data with loc[] using labels for row and column selection, iloc[] using integer positions, and bracket notation selecting columns, filtering data with boolean indexing creating masks based on conditions, query() method using SQL-like syntax, and string methods filtering text columns, data manipulation adding columns through assignment or apply() function, removing columns with drop() method, renaming columns with rename(), and sorting with sort_values(), grouping and aggregation using groupby() splitting data by categories, aggregate functions including sum(), mean(), count(), and agg() applying multiple functions, pivot tables with pivot_table() reshaping data, and handling missing values with isna() detecting NaN values, fillna() replacing missing data, and dropna() removing incomplete rows. Whether you're analyzing sales data identifying trends, processing survey results, cleaning datasets for machine learning, generating business reports, or exploring scientific measurements, mastering Pandas DataFrames provides essential tools for data analysis enabling efficient manipulation of structured data supporting data-driven insights and decision-making.

Creating and Accessing DataFrames

Creating DataFrames involves constructing tabular data from various sources including Python dictionaries, lists, NumPy arrays, and external files. The pd.DataFrame() constructor accepts multiple input formats creating labeled two-dimensional structures. Accessing data uses loc[] for label-based indexing, iloc[] for position-based indexing, and column selection with bracket notation.

# Creating and Accessing DataFrames

import pandas as pd

import numpy as np

# === Creating DataFrames from dictionaries ===

# Dictionary with lists (columns)

data = {

'Name': ['Alice', 'Bob', 'Charlie', 'David', 'Eve'],

'Age': [25, 30, 35, 28, 32],

'City': ['New York', 'San Francisco', 'Los Angeles', 'Chicago', 'Boston'],

'Salary': [70000, 85000, 95000, 72000, 88000]

}

df = pd.DataFrame(data)

print("DataFrame from dictionary:")

print(df)

print()

# === Creating from lists ===

# List of lists

data_list = [

['Alice', 25, 'New York'],

['Bob', 30, 'San Francisco'],

['Charlie', 35, 'Los Angeles']

]

df_list = pd.DataFrame(data_list, columns=['Name', 'Age', 'City'])

print("DataFrame from list:")

print(df_list)

print()

# === Creating from NumPy array ===

array_data = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

df_array = pd.DataFrame(array_data, columns=['A', 'B', 'C'])

print("DataFrame from NumPy array:")

print(df_array)

print()

# === Creating with custom index ===

df_custom = pd.DataFrame(

data,

index=['emp1', 'emp2', 'emp3', 'emp4', 'emp5']

)

print("DataFrame with custom index:")

print(df_custom)

print()

# === Reading from CSV ===

# Create sample CSV

df.to_csv('sample_data.csv', index=False)

# Read CSV

df_csv = pd.read_csv('sample_data.csv')

print("DataFrame from CSV:")

print(df_csv.head())

print()

# === Basic DataFrame information ===

print("DataFrame shape:", df.shape) # (rows, columns)

print("DataFrame size:", df.size) # Total elements

print("Column names:", df.columns.tolist())

print("Index:", df.index.tolist())

print("Data types:")

print(df.dtypes)

print()

# === DataFrame statistics ===

print("Describe (numeric columns):")

print(df.describe())

print()

print("Info:")

print(df.info())

print()

# === Accessing data with head() and tail() ===

print("First 3 rows:")

print(df.head(3))

print()

print("Last 2 rows:")

print(df.tail(2))

print()

# === Selecting columns ===

# Single column (returns Series)

names = df['Name']

print("Names (Series):")

print(names)

print(type(names))

print()

# Multiple columns (returns DataFrame)

subset = df[['Name', 'Salary']]

print("Name and Salary:")

print(subset)

print()

# === Accessing with loc[] (label-based) ===

# Single row by index

row = df.loc[0]

print("First row (loc):")

print(row)

print()

# Multiple rows

rows = df.loc[0:2] # Includes endpoint

print("Rows 0-2 (loc):")

print(rows)

print()

# Specific row and column

value = df.loc[0, 'Name']

print(f"Value at [0, 'Name']: {value}")

print()

# Multiple rows and columns

subset = df.loc[0:2, ['Name', 'Age']]

print("Subset with loc:")

print(subset)

print()

# All rows, specific columns

subset = df.loc[:, ['Name', 'Salary']]

print("All rows, Name and Salary:")

print(subset)

print()

# === Accessing with iloc[] (position-based) ===

# Single row by position

row = df.iloc[0]

print("First row (iloc):")

print(row)

print()

# Multiple rows

rows = df.iloc[0:3] # Excludes endpoint

print("Rows 0-2 (iloc):")

print(rows)

print()

# Specific row and column by position

value = df.iloc[0, 1] # First row, second column

print(f"Value at [0, 1]: {value}")

print()

# Multiple rows and columns by position

subset = df.iloc[0:3, [0, 3]] # First 3 rows, columns 0 and 3

print("Subset with iloc:")

print(subset)

print()

# === Iterating through DataFrame ===

print("Iterating through rows:")

for index, row in df.iterrows():

print(f"{row['Name']} is {row['Age']} years old")

print()

# === Setting values ===

# Set single value

df.loc[0, 'Age'] = 26

print("After updating age:")

print(df.head())

print()

# Set entire column

df['Country'] = 'USA'

print("After adding Country column:")

print(df.head())

print()

# Set values conditionally

df.loc[df['Age'] > 30, 'Category'] = 'Senior'

df.loc[df['Age'] <= 30, 'Category'] = 'Junior'

print("After adding Category:")

print(df)loc[] for label-based indexing (row/column names). Use iloc[] for position-based indexing (integer positions).Filtering and Data Manipulation

Filtering DataFrames selects rows meeting specific conditions using boolean indexing creating masks, query() method with SQL-like expressions, and string methods for text filtering. Data manipulation involves adding columns through assignment or calculations, removing with drop(), renaming with rename(), and sorting with sort_values() enabling data transformation and organization.

# Filtering and Data Manipulation

import pandas as pd

import numpy as np

# Create sample DataFrame

data = {

'Name': ['Alice', 'Bob', 'Charlie', 'David', 'Eve', 'Frank'],

'Age': [25, 30, 35, 28, 32, 27],

'City': ['New York', 'San Francisco', 'Los Angeles', 'Chicago', 'Boston', 'Seattle'],

'Salary': [70000, 85000, 95000, 72000, 88000, 76000],

'Department': ['Sales', 'Engineering', 'Sales', 'Engineering', 'Sales', 'Marketing']

}

df = pd.DataFrame(data)

print("Original DataFrame:")

print(df)

print()

# === Boolean indexing (filtering) ===

# Single condition

high_salary = df[df['Salary'] > 80000]

print("Employees with salary > 80000:")

print(high_salary)

print()

# Multiple conditions (AND)

young_high_earners = df[(df['Age'] < 30) & (df['Salary'] > 75000)]

print("Young high earners:")

print(young_high_earners)

print()

# Multiple conditions (OR)

sales_or_marketing = df[(df['Department'] == 'Sales') | (df['Department'] == 'Marketing')]

print("Sales or Marketing:")

print(sales_or_marketing)

print()

# NOT condition

not_sales = df[~(df['Department'] == 'Sales')]

print("Not in Sales:")

print(not_sales)

print()

# Using isin() for multiple values

selected_cities = df[df['City'].isin(['New York', 'Boston', 'Chicago'])]

print("Selected cities:")

print(selected_cities)

print()

# === String filtering ===

# Contains

contains_francisco = df[df['City'].str.contains('Francisco')]

print("Cities containing 'Francisco':")

print(contains_francisco)

print()

# Starts with

starts_with_s = df[df['City'].str.startswith('S')]

print("Cities starting with 'S':")

print(starts_with_s)

print()

# Case-insensitive filtering

contains_sales = df[df['Department'].str.lower() == 'sales']

print("Sales department (case-insensitive):")

print(contains_sales)

print()

# === Query method ===

# SQL-like filtering

filtered = df.query('Age > 28 and Salary < 90000')

print("Query result:")

print(filtered)

print()

# Using variables in query

min_age = 30

filtered = df.query('Age >= @min_age')

print(f"Age >= {min_age}:")

print(filtered)

print()

# === Adding columns ===

# Simple assignment

df['Bonus'] = df['Salary'] * 0.1

print("After adding Bonus column:")

print(df)

print()

# Conditional column

df['Senior'] = df['Age'] >= 30

print("After adding Senior column:")

print(df[['Name', 'Age', 'Senior']])

print()

# Using apply() with function

def categorize_salary(salary):

if salary < 75000:

return 'Low'

elif salary < 85000:

return 'Medium'

else:

return 'High'

df['Salary_Category'] = df['Salary'].apply(categorize_salary)

print("After adding Salary_Category:")

print(df[['Name', 'Salary', 'Salary_Category']])

print()

# Using lambda with apply

df['Name_Length'] = df['Name'].apply(lambda x: len(x))

print("After adding Name_Length:")

print(df[['Name', 'Name_Length']])

print()

# Combining multiple columns

df['Full_Info'] = df['Name'] + ' (' + df['City'] + ')'

print("After adding Full_Info:")

print(df[['Full_Info']].head())

print()

# === Removing columns ===

# Drop single column

df_dropped = df.drop('Bonus', axis=1)

print("After dropping Bonus:")

print(df_dropped.columns.tolist())

print()

# Drop multiple columns

df_dropped = df.drop(['Senior', 'Name_Length'], axis=1)

print("After dropping multiple columns:")

print(df_dropped.columns.tolist())

print()

# Drop in place

df.drop('Full_Info', axis=1, inplace=True)

print("Columns after in-place drop:")

print(df.columns.tolist())

print()

# === Renaming columns ===

# Rename single column

df_renamed = df.rename(columns={'Salary': 'Annual_Salary'})

print("After renaming:")

print(df_renamed.columns.tolist())

print()

# Rename multiple columns

df_renamed = df.rename(columns={

'Name': 'Employee_Name',

'Age': 'Employee_Age'

})

print("After renaming multiple:")

print(df_renamed.columns.tolist())

print()

# Rename all columns

df.columns = ['Emp_Name', 'Emp_Age', 'Emp_City', 'Emp_Salary', 'Emp_Dept', 'Emp_Bonus', 'Emp_Senior', 'Emp_Category']

print("After renaming all:")

print(df.columns.tolist())

# Reset column names

df.columns = ['Name', 'Age', 'City', 'Salary', 'Department', 'Bonus', 'Senior', 'Salary_Category']

print()

# === Sorting ===

# Sort by single column

sorted_df = df.sort_values('Age')

print("Sorted by Age (ascending):")

print(sorted_df[['Name', 'Age']])

print()

# Sort descending

sorted_df = df.sort_values('Salary', ascending=False)

print("Sorted by Salary (descending):")

print(sorted_df[['Name', 'Salary']].head())

print()

# Sort by multiple columns

sorted_df = df.sort_values(['Department', 'Salary'], ascending=[True, False])

print("Sorted by Department and Salary:")

print(sorted_df[['Name', 'Department', 'Salary']])

print()

# === Resetting index ===

df_reset = df.sort_values('Age').reset_index(drop=True)

print("After sorting and resetting index:")

print(df_reset[['Name', 'Age']].head())& for AND, | for OR, ~ for NOT. Always wrap conditions in parentheses: (df['A'] > 5) & (df['B'] < 10).Grouping and Aggregation

Grouping data with groupby() splits DataFrames by categorical variables enabling aggregate calculations on each group. Aggregation functions including sum(), mean(), count(), min(), max() compute statistics per group, while agg() applies multiple functions simultaneously. Understanding grouping enables summarizing data revealing patterns across categories.

# Grouping and Aggregation

import pandas as pd

import numpy as np

# Create sample DataFrame

data = {

'Department': ['Sales', 'Sales', 'Engineering', 'Engineering', 'Sales', 'Marketing', 'Marketing', 'Engineering'],

'Employee': ['Alice', 'Bob', 'Charlie', 'David', 'Eve', 'Frank', 'Grace', 'Henry'],

'Salary': [70000, 85000, 95000, 72000, 88000, 76000, 82000, 90000],

'Experience': [2, 5, 8, 3, 6, 4, 5, 7],

'Region': ['East', 'West', 'East', 'West', 'East', 'West', 'East', 'West']

}

df = pd.DataFrame(data)

print("Original DataFrame:")

print(df)

print()

# === Basic groupby ===

# Group by single column

grouped = df.groupby('Department')

# Get group names

print("Groups:", grouped.groups.keys())

print()

# Get specific group

sales_group = grouped.get_group('Sales')

print("Sales department:")

print(sales_group)

print()

# === Single aggregation ===

# Mean salary by department

mean_salary = df.groupby('Department')['Salary'].mean()

print("Mean salary by department:")

print(mean_salary)

print()

# Sum by department

total_salary = df.groupby('Department')['Salary'].sum()

print("Total salary by department:")

print(total_salary)

print()

# Count employees per department

count = df.groupby('Department').size()

print("Employee count by department:")

print(count)

print()

# === Multiple aggregations ===

# Multiple functions on single column

stats = df.groupby('Department')['Salary'].agg(['mean', 'min', 'max', 'sum'])

print("Salary statistics by department:")

print(stats)

print()

# Multiple columns, multiple functions

stats = df.groupby('Department').agg({

'Salary': ['mean', 'sum'],

'Experience': ['mean', 'max']

})

print("Multiple aggregations:")

print(stats)

print()

# Custom aggregation function

def salary_range(x):

return x.max() - x.min()

range_stats = df.groupby('Department')['Salary'].agg([

('Average', 'mean'),

('Range', salary_range),

('Count', 'count')

])

print("Custom aggregations:")

print(range_stats)

print()

# === Group by multiple columns ===

# Group by department and region

multi_group = df.groupby(['Department', 'Region'])['Salary'].mean()

print("Mean salary by department and region:")

print(multi_group)

print()

# Unstack for better view

multi_group_unstacked = df.groupby(['Department', 'Region'])['Salary'].mean().unstack()

print("Unstacked view:")

print(multi_group_unstacked)

print()

# === Transform (add aggregated values to original DataFrame) ===

# Add department average to each row

df['Dept_Avg_Salary'] = df.groupby('Department')['Salary'].transform('mean')

print("With department average:")

print(df[['Employee', 'Department', 'Salary', 'Dept_Avg_Salary']])

print()

# Calculate difference from department average

df['Diff_From_Avg'] = df['Salary'] - df['Dept_Avg_Salary']

print("Difference from average:")

print(df[['Employee', 'Salary', 'Dept_Avg_Salary', 'Diff_From_Avg']])

print()

# === Filter groups ===

# Keep groups with more than 2 employees

filtered = df.groupby('Department').filter(lambda x: len(x) > 2)

print("Departments with > 2 employees:")

print(filtered)

print()

# Keep groups where average salary > 80000

high_paying = df.groupby('Department').filter(lambda x: x['Salary'].mean() > 80000)

print("High-paying departments:")

print(high_paying)

print()

# === Pivot tables ===

# Simple pivot table

pivot = df.pivot_table(

values='Salary',

index='Department',

aggfunc='mean'

)

print("Pivot table - average salary:")

print(pivot)

print()

# Pivot with multiple dimensions

pivot_multi = df.pivot_table(

values='Salary',

index='Department',

columns='Region',

aggfunc='mean'

)

print("Pivot table - department x region:")

print(pivot_multi)

print()

# Pivot with multiple aggregations

pivot_agg = df.pivot_table(

values='Salary',

index='Department',

columns='Region',

aggfunc=['mean', 'count'],

fill_value=0

)

print("Pivot with multiple aggregations:")

print(pivot_agg)

print()

# === Value counts ===

# Count occurrences

dept_counts = df['Department'].value_counts()

print("Department value counts:")

print(dept_counts)

print()

# Value counts with percentage

dept_pct = df['Department'].value_counts(normalize=True) * 100

print("Department percentages:")

print(dept_pct)

print()

# === Cumulative aggregations ===

# Cumulative sum

df_sorted = df.sort_values('Experience')

df_sorted['Cumulative_Salary'] = df_sorted['Salary'].cumsum()

print("With cumulative salary:")

print(df_sorted[['Employee', 'Experience', 'Salary', 'Cumulative_Salary']])

print()

# Rolling mean (moving average)

df_sorted['Rolling_Avg_Salary'] = df_sorted['Salary'].rolling(window=3).mean()

print("With rolling average:")

print(df_sorted[['Employee', 'Salary', 'Rolling_Avg_Salary']]).reset_index() after groupby to convert grouped result back to DataFrame with regular columns.Handling Missing Values

Missing values appear as NaN (Not a Number) in DataFrames requiring special handling. Detection uses isna() or isnull() identifying missing entries, while fillna() replaces NaN with specified values including mean, median, or forward-fill, and dropna() removes rows or columns containing missing data. Proper handling of missing values ensures data quality and prevents errors in analysis.

# Handling Missing Values

import pandas as pd

import numpy as np

# Create DataFrame with missing values

data = {

'Name': ['Alice', 'Bob', None, 'David', 'Eve'],

'Age': [25, np.nan, 35, 28, np.nan],

'Salary': [70000, 85000, np.nan, 72000, 88000],

'City': ['New York', 'San Francisco', 'Los Angeles', None, 'Boston']

}

df = pd.DataFrame(data)

print("DataFrame with missing values:")

print(df)

print()

# === Detecting missing values ===

# Check for missing values

print("Missing values (isna):")

print(df.isna())

print()

# Count missing values per column

print("Missing value counts:")

print(df.isna().sum())

print()

# Percentage of missing values

print("Missing value percentages:")

print((df.isna().sum() / len(df)) * 100)

print()

# Check if any value is missing

print("Any missing values?", df.isna().any().any())

print()

# Rows with any missing value

rows_with_missing = df[df.isna().any(axis=1)]

print("Rows with missing values:")

print(rows_with_missing)

print()

# === Filling missing values ===

# Fill with specific value

df_filled = df.fillna(0)

print("Filled with 0:")

print(df_filled)

print()

# Fill with different values per column

df_filled = df.fillna({

'Name': 'Unknown',

'Age': df['Age'].mean(),

'Salary': df['Salary'].median(),

'City': 'Not Specified'

})

print("Filled with column-specific values:")

print(df_filled)

print()

# Forward fill (use previous value)

df_ffill = df.fillna(method='ffill')

print("Forward fill:")

print(df_ffill)

print()

# Backward fill (use next value)

df_bfill = df.fillna(method='bfill')

print("Backward fill:")

print(df_bfill)

print()

# Fill with mean for numeric columns

df_numeric_filled = df.copy()

for col in df.select_dtypes(include=[np.number]).columns:

df_numeric_filled[col].fillna(df[col].mean(), inplace=True)

print("Numeric columns filled with mean:")

print(df_numeric_filled)

print()

# === Interpolation ===

# Linear interpolation

df_interpolated = df.copy()

df_interpolated['Age'] = df_interpolated['Age'].interpolate()

print("Age column interpolated:")

print(df_interpolated)

print()

# === Dropping missing values ===

# Drop rows with any missing value

df_dropped = df.dropna()

print("After dropping rows with any NaN:")

print(df_dropped)

print()

# Drop rows where all values are missing

df_all_na = pd.DataFrame({

'A': [1, np.nan, 3],

'B': [np.nan, np.nan, np.nan],

'C': [7, 8, 9]

})

df_dropped_all = df_all_na.dropna(how='all')

print("After dropping rows where all are NaN:")

print(df_dropped_all)

print()

# Drop columns with any missing value

df_dropped_cols = df.dropna(axis=1)

print("After dropping columns with any NaN:")

print(df_dropped_cols)

print()

# Drop rows with missing values in specific columns

df_dropped_subset = df.dropna(subset=['Age', 'Salary'])

print("After dropping rows with NaN in Age or Salary:")

print(df_dropped_subset)

print()

# Drop rows with at least N non-null values

df_thresh = df.dropna(thresh=3) # Keep rows with at least 3 non-null values

print("Rows with at least 3 non-null values:")

print(df_thresh)

print()

# === Replacing values ===

# Replace specific value with NaN

df_replace = df.copy()

df_replace['City'] = df_replace['City'].replace('New York', np.nan)

print("After replacing 'New York' with NaN:")

print(df_replace)

print()

# Replace multiple values

df_replace = df.copy()

df_replace = df_replace.replace({

'New York': 'NYC',

'San Francisco': 'SF'

})

print("After replacing city names:")

print(df_replace)

print()

# === Checking for duplicates ===

data_dup = {

'Name': ['Alice', 'Bob', 'Alice', 'David'],

'Age': [25, 30, 25, 28]

}

df_dup = pd.DataFrame(data_dup)

print("DataFrame with duplicates:")

print(df_dup)

print()

# Find duplicates

print("Duplicate rows:")

print(df_dup.duplicated())

print()

# Show duplicate rows

print("Duplicate row values:")

print(df_dup[df_dup.duplicated()])

print()

# Drop duplicates

df_no_dup = df_dup.drop_duplicates()

print("After dropping duplicates:")

print(df_no_dup)

print()

# Drop duplicates based on specific columns

df_no_dup_name = df_dup.drop_duplicates(subset=['Name'])

print("After dropping duplicates by Name:")

print(df_no_dup_name)

print()

# === Complete data cleaning pipeline ===

def clean_dataframe(df):

"""Clean DataFrame by handling missing values and duplicates."""

df_clean = df.copy()

# Fill numeric columns with mean

numeric_cols = df_clean.select_dtypes(include=[np.number]).columns

for col in numeric_cols:

df_clean[col].fillna(df_clean[col].mean(), inplace=True)

# Fill categorical columns with mode (most frequent)

categorical_cols = df_clean.select_dtypes(include=['object']).columns

for col in categorical_cols:

df_clean[col].fillna(df_clean[col].mode()[0], inplace=True)

# Drop duplicates

df_clean.drop_duplicates(inplace=True)

# Reset index

df_clean.reset_index(drop=True, inplace=True)

return df_clean

df_cleaned = clean_dataframe(df)

print("Cleaned DataFrame:")

print(df_cleaned)

print("\nMissing values after cleaning:", df_cleaned.isna().sum().sum())Data Analysis Best Practices

- Always explore data first: Use

df.head(),df.info(),df.describe()understanding structure before analysis. Check data types, missing values, and distributions - Handle missing values appropriately: Choose strategy based on context: fill with mean/median for numeric, drop if few missing, or use domain knowledge determining best approach

- Use vectorized operations: Avoid loops when possible. Pandas vectorized operations significantly faster than iterating rows with

iterrows() - Chain operations for readability: Use method chaining creating readable pipelines:

df.query('Age > 30').groupby('Department')['Salary'].mean() - Reset index after groupby: Use

.reset_index()after aggregations converting multi-level indexes to regular columns for easier manipulation - Use appropriate data types: Convert columns to optimal types with

astype(). Usecategorydtype for categorical data saving memory - Save intermediate results: Export DataFrames to CSV after cleaning or transformation preventing re-computation:

df.to_csv('cleaned_data.csv') - Document assumptions: Add comments explaining filtering criteria, fill strategies, and transformations. Makes analysis reproducible and maintainable

- Validate results: Check aggregation results make sense. Use

assertstatements verifying expectations preventing logic errors - Use copy() to avoid warnings: Create explicit copies with

df.copy()when modifying DataFrames preventing SettingWithCopyWarning errors

.apply() only when vectorization impossible. Pandas optimized for vectorized operations.Conclusion

Data analysis with Pandas enables efficient manipulation of tabular data through DataFrames providing two-dimensional labeled structures supporting diverse operations. Creating DataFrames accepts multiple input formats including dictionaries with keys becoming columns, lists creating rows, NumPy arrays for numeric data, and reading external files with read_csv() loading CSV files, read_excel() for Excel spreadsheets, and read_json() for JSON data. Accessing data uses multiple methods with loc[] performing label-based indexing selecting rows and columns by names, iloc[] using position-based indexing with integer positions, bracket notation selecting columns returning Series or DataFrames, head() and tail() viewing beginning and end rows, and iterrows() iterating through rows though vectorized operations preferred for performance.

Filtering DataFrames uses boolean indexing creating masks with conditions like df[df['Age'] > 30] selecting rows meeting criteria, combining conditions with & for AND, | for OR, and ~ for NOT requiring parentheses around each condition, query() method using SQL-like syntax for readable filtering, string methods including contains(), startswith(), and endswith() filtering text columns, and isin() checking membership in lists. Data manipulation includes adding columns through assignment or apply() function executing custom logic, removing columns with drop() specifying axis=1, renaming with rename() accepting dictionaries mapping old to new names, sorting with sort_values() ordering by columns with ascending parameter, and resetting indexes with reset_index() after sorting. Grouping data with groupby() splits DataFrames by categorical variables enabling per-group analysis, aggregation functions including sum(), mean(), count(), min(), max() computing statistics, agg() applying multiple functions simultaneously with custom names, transform() adding group-level calculations to original DataFrame maintaining row count, filter() selecting groups meeting conditions, and pivot_table() reshaping data creating cross-tabulations. Handling missing values requires detecting NaN with isna() or isnull() identifying missing entries, filling with fillna() using specific values, column means, or forward/backward fill propagating adjacent values, interpolate() estimating missing values from surrounding data, dropna() removing rows or columns with missing data controlling strictness with thresh parameter, and replacing values with replace() substituting specific values. Best practices emphasize exploring data first using head(), info(), and describe() understanding structure, handling missing values appropriately choosing strategies based on context, using vectorized operations avoiding slow loops, chaining operations for readable pipelines, resetting indexes after groupby, using appropriate data types optimizing memory, saving intermediate results preventing re-computation, documenting assumptions for reproducibility, validating results ensuring correctness, and using copy() avoiding SettingWithCopyWarning errors. By mastering DataFrame creation and structure, data access methods, filtering and manipulation techniques, grouping and aggregation operations, missing value handling strategies, and best practices, you gain essential tools for data analysis enabling efficient processing of datasets supporting insights extraction, pattern identification, and data-driven decision-making across business analytics, scientific research, and data science workflows.

$ share --platform

$ cat /comments/ (0)

$ cat /comments/

// No comments found. Be the first!